- Research Context

The project "Portability and Performance in Heterogeneous Many Core Systems", Ref. PTDC/EIA-EIA/100035/2008, was an R&D project funded by the Portuguese Foundation for Science and Technology (FCT) and carried out from May 2010 to June 2013. The main contributions initially planned included: i) a better understanding of the addressed computing paradigms (for CPUs and specialized coprocessors) and of their suitability for interactive global illumination (IGI); ii) the development and assessment of a performance model and scheduling mechanism for heterogeneous many-core distributed systems; iii) a specification of, and support for, a distributed memory model on top of OpenCL; iv) the design, evaluation and dissemination of an efficient, adaptive and portable OpenCL IGI engine.

Contribution iii) was the main target of a specific task of the project (T5 - Distributed Memory), and clOpenCL (cluster OpenCL) is the main byproduct of that task.

- Distributed Heterogeneous Computing with clOpenCL

Clusters of heterogeneous computing nodes provide an opportunity to significantly increase the performance of parallel and High-Performance Computing (HPC) applications by combining traditional multi-core CPUs with accelerator devices, interconnected by high-throughput, low-latency networking technologies. However, developing efficient applications for clusters that integrate GPUs and other accelerators often requires great effort, as programmers must follow complex development methodologies to adapt algorithms and applications to the new heterogeneous parallel environment.

OpenCL is an open programming standard for heterogeneous computing. It suffers, however, from a major limitation: applications can only use the compute devices present on a single machine. clOpenCL (cluster OpenCL) extends the original single-node OpenCL model by enabling the deployment and execution of OpenCL applications on clusters of heterogeneous nodes.

Compared with similar projects, clOpenCL has two advantages: i) it can take full advantage of commodity networking hardware through Open-MX, and ii) programmers/users need neither special privileges nor exclusive access to scarce resources (e.g., accelerators) to deploy the desired execution environment.

- Architecture

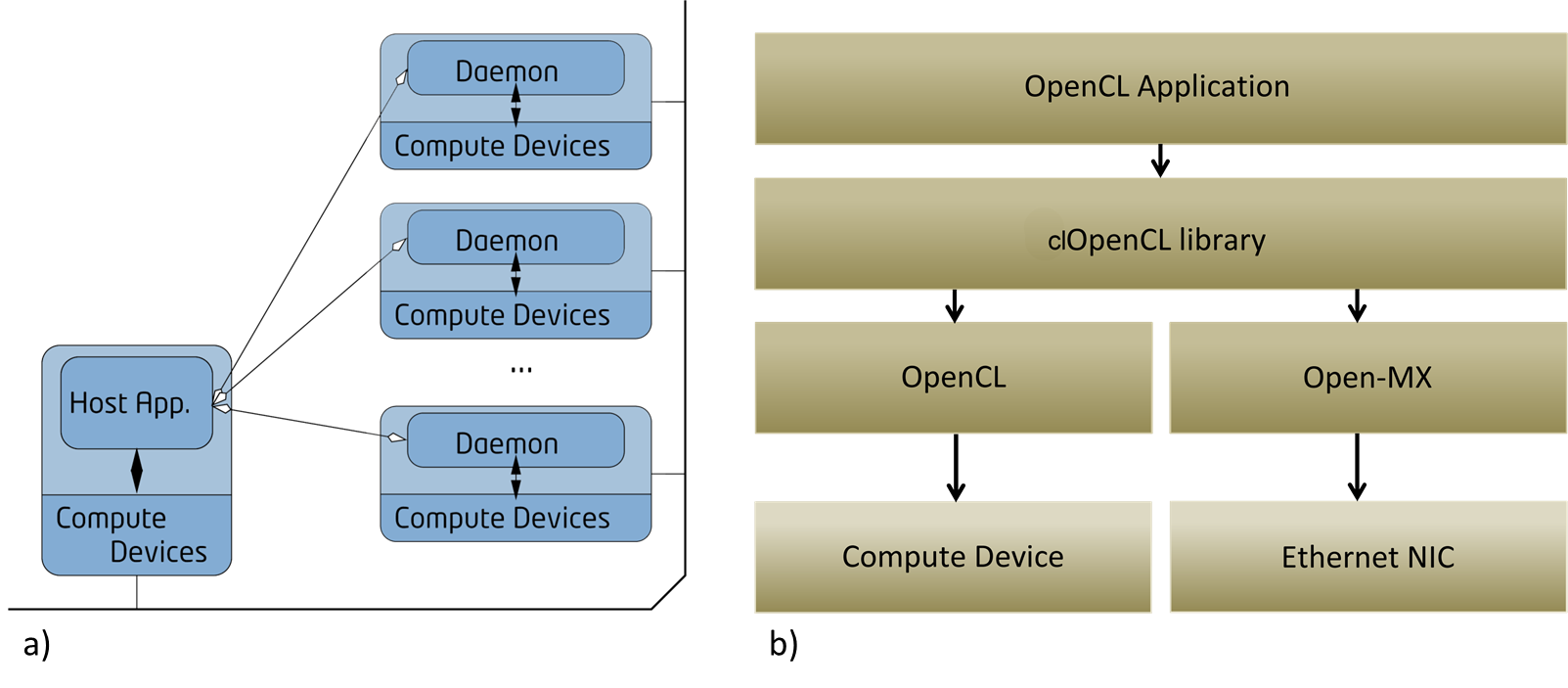

Figure 1 - clOpenCL (a) operation model, (b) host application layers.

An OpenCL application comprises a host program and a set of kernels intended to run on compute devices. Figure 1 presents (a) the clOpenCL operation model, where a single host program interacts with multiple compute devices (local or remote), and (b) the software/hardware layers present in the host program.

Every call to an OpenCL primitive is intercepted by the clOpenCL wrapper library, which redirects its execution either to a specific clOpenCL daemon on a cluster node or to the local OpenCL runtime. clOpenCL daemons are simple OpenCL programs that handle remote calls and interact with local devices. Each user spawns their own set of daemons, possibly sharing particular compute devices with other cluster users.
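To make the routing step concrete, here is a minimal sketch of one way a wrapper library could map a device index, as seen by the single host program, onto the node that hosts the device and the device's index within that node's local OpenCL runtime. It is a hypothetical illustration, not clOpenCL's actual code: the `device_location` type, the `locate_device` and `is_local` functions and the per-node device counts are all assumptions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical location of a device in the cluster: the node (and thus the
 * daemon, or the local runtime) that owns it, plus its index on that node. */
typedef struct {
    int node;        /* index of the node/daemon hosting the device */
    int local_index; /* device index within that node's OpenCL runtime */
} device_location;

/* Map a global device index, as seen by the host program, onto the node that
 * hosts it. devices_per_node[n] is the (assumed) number of devices reported
 * by node n. Returns 0 on success, -1 if the index is out of range. */
static int locate_device(const int *devices_per_node, size_t num_nodes,
                         int global_index, device_location *out) {
    assert(devices_per_node != NULL && out != NULL);
    if (global_index < 0)
        return -1;
    for (size_t n = 0; n < num_nodes; n++) {
        if (global_index < devices_per_node[n]) {
            out->node = (int)n;
            out->local_index = global_index;
            return 0;
        }
        global_index -= devices_per_node[n]; /* skip this node's devices */
    }
    return -1;
}

/* A device on the host program's own node can be served by the local OpenCL
 * runtime; any other node means forwarding the call to a remote daemon. */
static int is_local(const device_location *loc, int host_node) {
    return loc->node == host_node;
}
```

For example, counting only the GPUs of the evaluation testbed (`devices_per_node = {2, 1, 1, 1}`), global index 2 resolves to node 1, local device 0, so a host program running on node 0 would have that call forwarded to node 1's daemon.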

A typical clOpenCL application starts on a particular cluster node and creates OpenCL objects on cluster nodes. For each object, the clOpenCL wrapper library returns a "fake pointer" used as a global identifier, and stores the real pointer along with the corresponding daemon location. Data exchange between the wrapper library and remote daemons uses Open-MX, an open-source message-passing stack over generic Ethernet, which provides low-level communication mechanisms in user space and achieves low-latency communication with low CPU overhead.
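The fake-pointer bookkeeping can be sketched as a small table, assuming the wrapper simply numbers the objects it hands out. The names `object_entry`, `register_object`, `resolve_object` and `release_object`, the fixed capacity and the integer daemon identifiers are hypothetical; the actual clOpenCL data structures may differ.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_OBJECTS 1024 /* arbitrary capacity for this sketch */

/* Where a real OpenCL object lives: which daemon created it (hypothetical
 * numbering) and the object's pointer value inside that daemon's process. */
typedef struct {
    int daemon_id;
    uintptr_t real_ptr;
    int in_use;
} object_entry;

static object_entry object_table[MAX_OBJECTS];

/* Store the real pointer and daemon location, and return a "fake pointer":
 * the table slot number plus one, so that NULL is never a valid handle. */
static void *register_object(int daemon_id, uintptr_t real_ptr) {
    for (size_t i = 0; i < MAX_OBJECTS; i++) {
        if (!object_table[i].in_use) {
            object_table[i] = (object_entry){daemon_id, real_ptr, 1};
            return (void *)(uintptr_t)(i + 1);
        }
    }
    return NULL; /* table full */
}

/* Translate a fake pointer back into (daemon, real pointer) before a call is
 * forwarded. Returns 0 on success, -1 for an unknown handle. */
static int resolve_object(const void *fake, int *daemon_id, uintptr_t *real_ptr) {
    uintptr_t slot = (uintptr_t)fake;
    assert(daemon_id != NULL && real_ptr != NULL);
    if (slot == 0 || slot > MAX_OBJECTS || !object_table[slot - 1].in_use)
        return -1;
    *daemon_id = object_table[slot - 1].daemon_id;
    *real_ptr = object_table[slot - 1].real_ptr;
    return 0;
}

/* Forget a handle, e.g. when the application releases the object. */
static void release_object(const void *fake) {
    uintptr_t slot = (uintptr_t)fake;
    if (slot != 0 && slot <= MAX_OBJECTS)
        object_table[slot - 1].in_use = 0;
}
```

Returning an identifier rather than the real pointer matters because a real pointer is only meaningful inside the daemon process that created the object, and pointers from different daemons could even coincide. In this scheme, a wrapped call such as `clSetKernelArg` would resolve its object arguments before the request is sent to the owning daemon.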

- Usage/Evaluation Example

Using clOpenCL

Porting an OpenCL program to clOpenCL only requires linking it against the OpenCL and clOpenCL libraries. This is accomplished with the GCC option -Xlinker --wrap, which performs function wrapping at link time. Currently, clOpenCL does not support mapping buffer and image objects. However, in its current state, clOpenCL is sufficient to test its general concept, including running basic OpenCL applications [ARPS12] such as a matrix multiplication kernel.

Evaluation TestBed

The testbed is a small commodity cluster with 4 compute nodes, each with an Intel Core 2 Quad Q9650 3 GHz CPU, 8 GB of RAM and two 1 Gbps Ethernet NICs (an on-board Intel 82566DM-2 and a PCI64 SysKonnect SK-9871). The nodes are also fitted with NVIDIA GTX 460 GPUs (1 GB of GDDR5 RAM each): one node (compute-4-0) has 2 GPUs and the remaining three nodes (compute-4-1 to compute-4-3) have 1 GPU each.

All cluster systems run Linux Rocks [Gro12d] (version 5.4.3). The OpenCL platforms and GPU driver versions used were AMD SDK 2.6 with driver 11.12, and CUDA 4.1.28 with driver 285.05.33. Open-MX 1.5.2 was used with the SysKonnect NICs, which provide better performance than the on-board Intel NICs.

Case Study

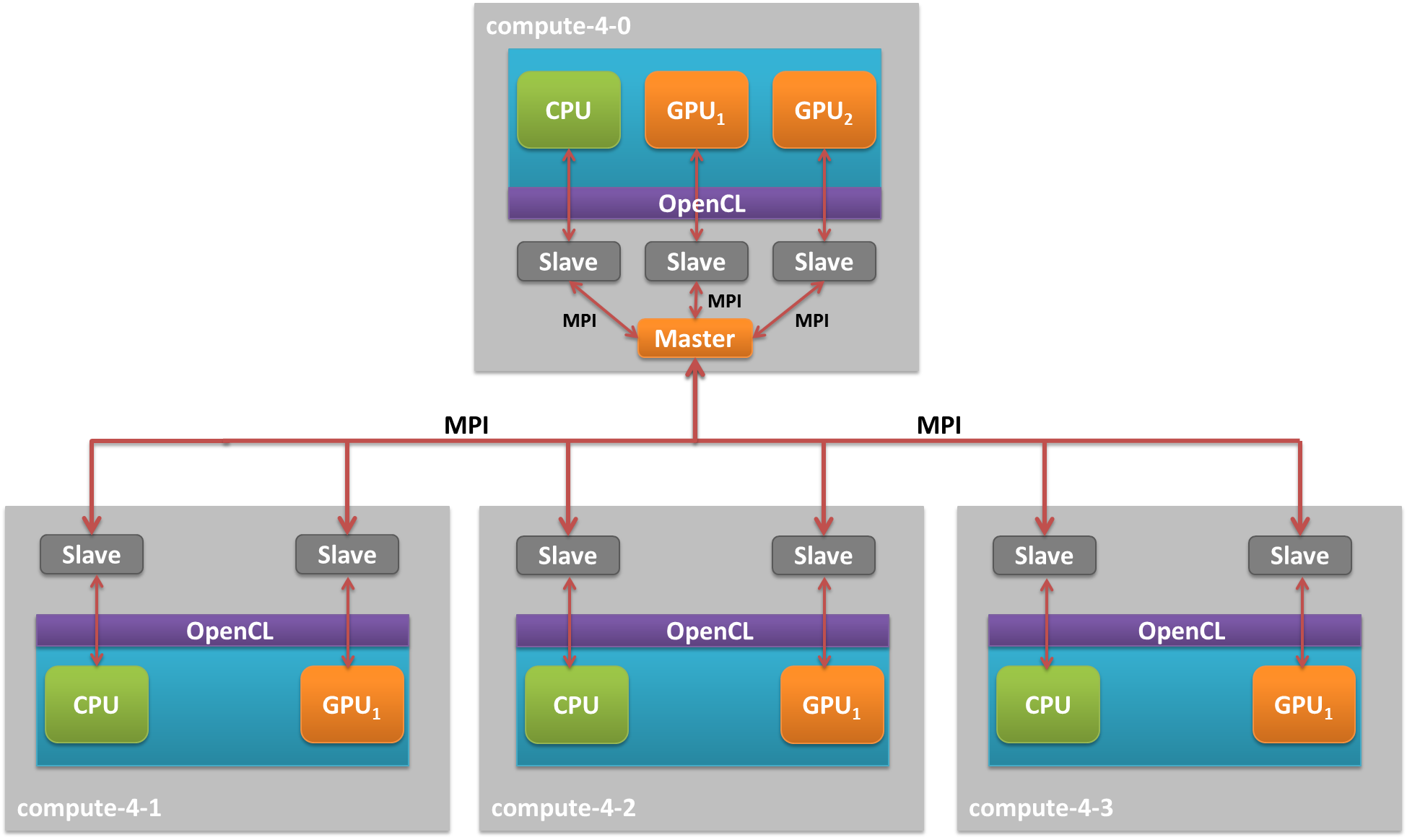

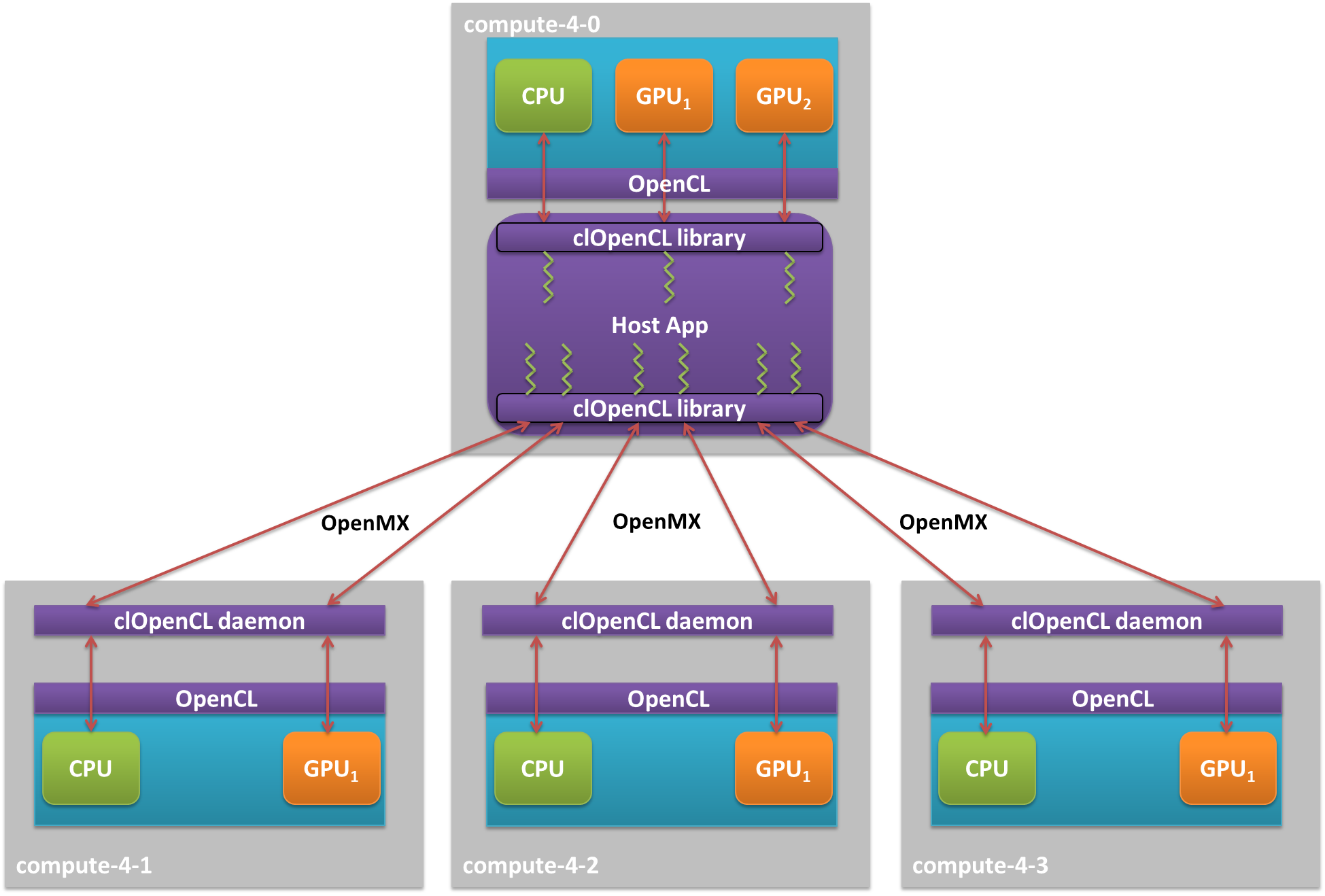

The Matrix Product algorithm is a reference HPC "benchmark" algorithm. Although simple, this "embarrassingly parallel" algorithm is sufficient to test the scalability and correctness of the current clOpenCL implementation. It was evaluated in three different distributed configurations: i) a typical MPI implementation (MPI-Only), where each MPI task uses the BLAS multiplication routine from the ATLAS library [Soul12] (previously optimized for our cluster); ii) a second MPI implementation (MPI-with-OpenCL), where each MPI task exploits local OpenCL devices for the sub-matrix multiplications (Figure 2); iii) a clOpenCL implementation, where distributed POSIX threads interact with OpenCL devices for the same purpose (Figure 3).
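The exact decomposition used in the experiments is not described here; the sketch below assumes a simple block-row decomposition, in which each worker (an MPI task or a POSIX thread driving one OpenCL device) computes a contiguous band of rows of C = A × B. The helper names `row_block` and `multiply_rows` are hypothetical, and the plain triple loop only stands in for the ATLAS BLAS routine or the OpenCL kernel actually used.

```c
#include <assert.h>
#include <stddef.h>

/* Compute the contiguous band of rows [*first, *first + *count) of an
 * n-row matrix assigned to worker w out of p; the n % p leftover rows go
 * one each to the first workers, so band sizes differ by at most one. */
static void row_block(size_t n, size_t p, size_t w,
                      size_t *first, size_t *count) {
    assert(p > 0 && w < p && first != NULL && count != NULL);
    size_t base = n / p, extra = n % p;
    *count = base + (w < extra ? 1 : 0);
    *first = w * base + (w < extra ? w : extra);
}

/* Compute rows [first, first + count) of C = A * B for n x n row-major
 * matrices. Each worker needs only its band of A, but all of B. */
static void multiply_rows(const float *a, const float *b, float *c, size_t n,
                          size_t first, size_t count) {
    for (size_t i = first; i < first + count; i++) {
        for (size_t j = 0; j < n; j++) {
            float sum = 0.0f;
            for (size_t k = 0; k < n; k++)
                sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}
```

With n = 5 rows and p = 3 workers, the bands are rows {0, 1}, {2, 3} and {4}. Because the bands are disjoint, the workers can run concurrently on different devices without coordinating writes to C.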

Figure 2 - deployment scenario for the MPI-with-OpenCL approach.

Figure 3 - deployment scenario for the clOpenCL approach.

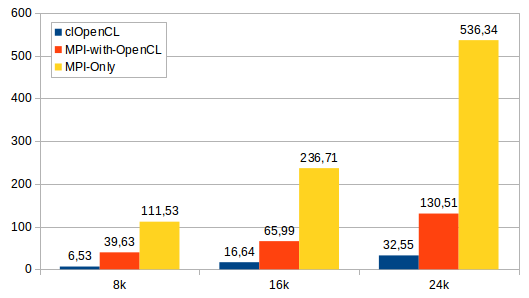

The results of this evaluation (Figure 4) show that the clOpenCL approach can outperform more typical (hybrid) approaches to the same problem.

Figure 4 - multiplication times (seconds) for square matrices of order 8k, 16k and 24k.
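For a rough sense of scale, assume that "8k", "16k" and "24k" denote orders 8192, 16384 and 24576 and that elements are single-precision (4-byte) floats; neither assumption is stated above. The storage for one n × n matrix is then:

```latex
\mathrm{size}(n) = 4n^2\ \text{bytes}, \qquad
\mathrm{size}(8192) = 2^{28}\,\text{B} = 0.25\ \text{GiB}, \quad
\mathrm{size}(16384) = 2^{30}\,\text{B} = 1\ \text{GiB}, \quad
\mathrm{size}(24576) = 9 \cdot 2^{28}\,\text{B} = 2.25\ \text{GiB}.
```

Under these assumptions, a single 16k matrix already matches the 1 GB of GDDR5 memory on a GTX 460, and A, B and C together at order 24k take 6.75 GiB, close to the 8 GB of RAM in each node. This is why the operands are split into sub-matrices and spread across the nodes.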

- Publications

- [ARPS12] Alves, Albano; Rufino, José; Pina, António; Santos, Luís Paulo; "clOpenCL - Supporting Distributed Heterogeneous Computing in HPC Clusters"; 10th Int. Workshop HeteroPar'2012 - Algorithms, Models and Tools for Parallel Computing on Heterogeneous Platforms; Lecture Notes in Computer Science 7640 (Euro-Par 2012 Workshops), Springer, Heidelberg, pp. 112-122, 2012; [http://dx.doi.org/10.1007/978-3-642-36949-0_14]

- Master Thesis

- Tiago Filipe Rodrigues Ribeiro; "Developing and Evaluating clOpenCL Applications for Heterogeneous Clusters"; Master Thesis, Polytechnic Institute of Bragança, Nov 2012; [http://hdl.handle.net/10198/7948]

- Mário João da Costa Afonso; "clOpenCL - OpenCL para ambiente cluster" [clOpenCL - OpenCL for cluster environments]; Master Thesis, Polytechnic Institute of Bragança, Nov 2012; [http://hdl.handle.net/10198/8066]